Contributors: Ernest Wong, Charles Covey-Brandt, and Michael Maltese

Once upon a time, in the land of Disqussia, two engineers were given a design for a paper plane and asked to figure out whether or not it flies. One of the engineers took out a piece of paper and started solving aerodynamic equations. The other engineer took out a piece of paper and started folding it up, then gave it a flick and watched it soar …

Sometimes, circumstances call for a paper plane proof—a “build first, validate second” approach that applies to situations where the task of building is actually easier than the task of validating. In this blog post, we are going to walk through a real-world case where we used this approach at Disqus. The case revolved around an unorthodox idea, and the problem of how to validate it.

Sometimes at Disqus we have to abandon the tried and true, vetted and validated ways of doing things in order to increase performance—like over a year ago, when we moved a major component of our split testing system from the back end to the front end. The component we moved is what we call “bucketing”. It’s when we put a user into one test group or another—the users within one group, or bucket, experience a different version of the product than the users in another bucket. For split testing, you want bucketing to be randomized so as not to introduce bias into the data you gather from the test. Conventionally, bucketing is done server-side because—and this is the reason the idea is unorthodox—randomization is easier to ensure when the process is centralized. However, because of performance optimizations we were making on the back end, it became increasingly difficult for us to keep the bucketing process there. (By the way, I’m not going to go into any detail about these server-side performance optimizations, the subject of which could be a blog post by itself. Suffice it to say it involved heavy caching.)

The Solution ~ wat wat in the browser

Eventually we decided to sidestep the back-end bucketing problems we were facing by moving the logic into our front-end JavaScript code base. It so happened that we had already spent some time developing client-side code to generate unique ids (which we had previously verified to have tolerably low collision rates). We decided to leverage that code in order to do the bucketing. Without going too deep into the details, the idea is that we use JavaScript running in the client to generate a random id, which we persist client-side. Then, each time the user visits a page, we read that id and put it through a hashing algorithm with a good distribution to transform it into a discrete bucket number (in our case, an integer from 0 to 99). There is one obvious drawback with this approach: if a user visits the app using two browsers, it is likely that in one browser the user will be put into one bucket, and in the other browser put into another. In other words, we’re performing per-client bucketing, as opposed to per-user—just something to keep in mind.

The Concern: is client-side bucketing random enough?

I was quite skeptical of using client-side JavaScript to generate random ids. I am no expert in pseudorandom number generators (PRNGs), but my understanding is that the prescribed use is: give a PRNG an initial value (a seed), then call the generator function repeatedly. The set of numbers that come out of that function will have a uniform distribution. This is subtly different from what my teammates were proposing. Rather than call a single instance of the generator function repeatedly, they were suggesting that we use a huge set of generator function instances and call each of those instances once—i.e., in each of the millions of browsers that run our app, we would call either crypto.getRandomValues or Math.random once. In particular, I was concerned that because Math.random, our fallback, uses time as the seed, and we have many people accessing our app at the exact same millisecond, and our traffic is by no means evenly distributed over time (busy during the day, quiet at night), that our ids would not be very randomly distributed.

The difference between the prescribed usage of a PRNG and what we were proposing is somewhat analogous to the difference between rolling one die a hundred times versus rolling one hundred dice at once. You can imagine that if the dice you roll are not randomly arranged—imagine, for example, that each of them have the same side pointed downward before the roll—that the distribution of the hundred dice numbers after the roll will probably not look like a uniform distribution.

The Validation or: How I learned to stop worrying and love statistics

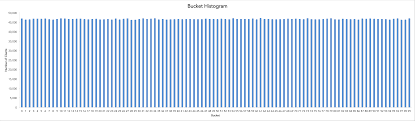

Rather than debate and theorize about the correctness of the approach, which could have taken a long time, one of my teammates, Charles, decided to simply build it, run it, and then check the results. All of the ids generated on the client were sent to our own data warehouse. Charles collected all of the ids—over 4.6 million of them—from a typical twenty-four hour period. He then ran the same hashing algorithm (using Node.js) on these ids that we used in the browser to assign the user to one of a hundred different buckets. What he found was a flat (i.e., uniform) distribution. In other words, they were the kind of results you would expect if you picked a number randomly from 0 to 99 … 4.6 million times.

The Conclusion ~ hakuna meta matatata

I’ve mostly stopped worrying since then. Sure, I have some doubts. We have not done an exhaustive analysis on our bucketing to ensure that, for example, users with MacBooks are not more likely to be put in some buckets more than others—or more pertinently, if browsers that do not support crypto.getRandomValues end up having predictable, confined bucketing.

However, even if there is a little bit of bias in the bucketing system, its consequences can be mitigated in at least two ways. One way is to employ the standard statistical technique of increasing your sample size—i.e., widening the set of buckets. In other words, if you see a significant change in your data correlated to a product change that you released to a small set of buckets, it’s probably a good idea to analyze that change across a wider set of buckets before incorporating the change permanently. The second way to mitigate risk is common sense: is the change that you are about to push one that you can live with or take back if it turns out the data you collected during the experimental release was severely flawed? If not, it may not be a great candidate for split testing. If you think about it, split testing is an embrace of the same philosophy espoused herein—when it costs less to “build first, validate second,” do that, rather than try to validate before building.

I would not bet my life on the randomness of our front-end bucketing. I would not want to use it to conduct real scientific experiments to draw far-reaching conclusions about the inherent nature of the universe. But for product development, we have already been using it for some time, and so far, it seems to work: it allows us to predict how a change in our product will correlate to a change in our data once released to the full set of users. And that’s all we are really asking of it.

And so we all lived happily ever after. The end. 📃✈.

Now, if you’re curious, you can take a look at the implementation in JavaScript.