Earlier this year, we committed to fighting hate speech and began taking the first steps toward curbing toxicity on Disqus. As a Product Analyst, I focus on developing technology that encourages good content. Our goals for this technology are to enhance community management tools for moderators, give users more power to address abuse and toxic comments within the communities they participate in, and improve the internal tools our Abuse team uses to review reported content that violates our Terms and Policies.

What is “toxic” content?

Perhaps the most difficult and nebulous task we’ve taken on recently is defining what we actually mean by a “toxic” comment. A toxic comment typically has two or more of the following properties:

- Abuse: The main goal of the comment is to abuse or offend an individual or group of individuals

- Trolling: The main goal of the comment is to garner a negative response

- Lack of contribution: The comment does not actually contribute to the conversation

- Reasonable reader [1] property: Reading the comment would likely cause a reasonable person to leave a discussion thread.

We chose the threshold of at least two properties deliberately. One might argue that “abuse” alone is enough to make a comment toxic, but abusive comments typically meet at least one of the other properties as well. The “two property” guideline also keeps two kinds of legitimate comments from being flagged as toxic: comments that don’t add to the conversation (such as “haha”) and comments that provide an opposing viewpoint (such as a poignant post from a conservative expressing her views on a liberal website).
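The two-property guideline is essentially a counting rule. The sketch below is purely illustrative: it assumes a human reviewer has already labeled each property, and the `CommentProperties` class and its field names are hypothetical, not part of Apothecary.

```python
from dataclasses import dataclass


@dataclass
class CommentProperties:
    """Hypothetical per-comment labels for the four properties listed above."""
    abuse: bool = False
    trolling: bool = False
    lack_of_contribution: bool = False
    drives_away_reasonable_reader: bool = False


def is_toxic(p: CommentProperties, min_properties: int = 2) -> bool:
    """Return True when the comment meets at least `min_properties` of the four properties."""
    count = sum([p.abuse, p.trolling, p.lack_of_contribution,
                 p.drives_away_reasonable_reader])
    return count >= min_properties


# "haha": adds nothing, but meets only one property, so it is not toxic.
print(is_toxic(CommentProperties(lack_of_contribution=True)))  # False
# An insult aimed at another commenter that also adds nothing to the thread.
print(is_toxic(CommentProperties(abuse=True, lack_of_contribution=True)))  # True
# A strongly worded opposing viewpoint meets none of the properties.
print(is_toxic(CommentProperties()))  # False
```

Counting properties rather than keying on any single one is what keeps short but harmless replies and unpopular opinions out of the toxic bucket.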

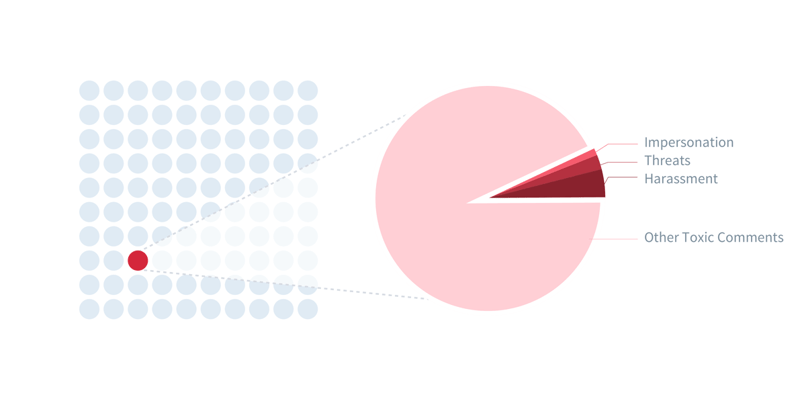

Three specific subsets of toxic comments (targeted harassment, impersonation, and direct threats of harm) already explicitly violate our Terms of Service. Not all toxic comments meet these criteria, but even those that don’t still increase the moderation burden and detract from the communities they are posted in.

Fewer than 1% of Disqus’s comments are toxic, and not all toxic comments violate our Terms and Conditions.

Introducing Apothecary: Disqus’ “barometer” for toxic content

We are developing a “toxicity barometer,” which we refer to internally as Apothecary, to process the nearly 50 million comments Disqus receives each month. Apothecary combines third-party services with our own homegrown data tools: we feed it the comment text and information about the comment’s author, and it returns a toxicity score. That score will, in turn, be incorporated into the moderator toolbox and user-facing tools.
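The post doesn’t describe how Apothecary combines its inputs into one score. As a minimal sketch, assuming the text-based score is already a value in [0, 1] (for example, a probability from a third-party service) and using the author’s past removal rate as a stand-in for “the comment author’s information,” a weighted average could look like this. The `AuthorInfo` fields, the function names, and the 0.8/0.2 weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AuthorInfo:
    """Hypothetical author features; Apothecary's real inputs are not documented."""
    comments_posted: int
    comments_removed: int


def removal_rate(author: AuthorInfo) -> float:
    """Share of the author's past comments that moderators removed (0.0 for new authors)."""
    if author.comments_posted == 0:
        return 0.0
    return author.comments_removed / author.comments_posted


def toxicity_score(text_score: float, author: AuthorInfo,
                   text_weight: float = 0.8, author_weight: float = 0.2) -> float:
    """Blend a text-based score in [0, 1] with the author's removal rate.

    The default weights are placeholders chosen only for illustration.
    """
    if not 0.0 <= text_score <= 1.0:
        raise ValueError("text_score must be in [0, 1]")
    total = text_weight + author_weight
    return (text_weight * text_score + author_weight * removal_rate(author)) / total


# Same comment text, two different authors.
print(round(toxicity_score(0.9, AuthorInfo(comments_posted=100, comments_removed=50)), 2))  # 0.82
print(round(toxicity_score(0.9, AuthorInfo(comments_posted=0, comments_removed=0)), 2))    # 0.72
```

A weighted average keeps the result in [0, 1] and makes each signal’s influence explicit; a production system would more likely learn these weights from data than hand-pick them.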

Developing Apothecary started with talking to publishers and moderators to understand the kinds and volume of toxic comments they deal with. Our publishers are a very diverse group, so a “one size fits all” approach to improving moderation simply wouldn’t work. What counts as acceptable language varies widely across communities, which is why global word and phrase filters don’t work, and this remains the leading challenge in Apothecary’s development. To augment our efforts, we’ve been testing third-party APIs, including Google’s Perspective API (which is used by the New York Times), to help us better identify and score toxic comments.
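For readers curious what querying Perspective involves, here is a minimal sketch using only the Python standard library. It follows the public `comments:analyze` request and response shape (a `comment.text` field, `requestedAttributes` with `TOXICITY`, and a `summaryScore.value` in the response), but it is an illustration, not how Disqus integrates the service. The helper names are hypothetical, an API key is required for the network call, and the sample response below is made up rather than real API output.

```python
import json
import urllib.request

# Perspective API analyze endpoint; requests need an API key from Google Cloud.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str) -> dict:
    """Request body asking Perspective for the TOXICITY attribute of one comment."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }


def parse_toxicity(response: dict) -> float:
    """Extract the summary TOXICITY probability (0.0 to 1.0) from a response body."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_comment(text: str, api_key: str) -> float:
    """Send one comment to Perspective and return its TOXICITY score (makes a network call)."""
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return parse_toxicity(json.load(resp))


# Offline example with a made-up response shaped like Perspective's output.
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.87, "type": "PROBABILITY"}}
    }
}
print(parse_toxicity(sample_response))  # 0.87
```

Separating request building and response parsing from the HTTP call makes the scoring logic easy to test without network access or a key.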

Next steps for Apothecary

As Apothecary matures, we will integrate it into Disqus, starting with the moderation panel. We will add a “toxic” option to the panel’s filters so moderators can prioritize toxic comments. We are also looking at turning Apothecary’s scores into suggested actions for moderators (for example, “we suggest you delete this comment”) to reduce the time they spend moderating.
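One simple way to turn scores into a “toxic” filter and suggested actions is a pair of thresholds. The sketch below assumes scores in [0, 1]; the `Suggestion` values, the threshold numbers, and the helper names are hypothetical. Because acceptable language varies so much between communities, the thresholds are parameters that each site could tune rather than global constants.

```python
from enum import Enum


class Suggestion(Enum):
    """Hypothetical suggestions shown to a moderator next to a comment."""
    NONE = "no action suggested"
    REVIEW = "we suggest you review this comment"
    DELETE = "we suggest you delete this comment"


# Hypothetical (review_at, delete_at) thresholds; each site could tune its own.
DEFAULT_THRESHOLDS = (0.5, 0.9)


def suggest_action(score: float,
                   thresholds: tuple[float, float] = DEFAULT_THRESHOLDS) -> Suggestion:
    """Map a toxicity score in [0, 1] to a suggested moderator action."""
    review_at, delete_at = thresholds
    if review_at > delete_at:
        raise ValueError("review threshold must not exceed delete threshold")
    if score >= delete_at:
        return Suggestion.DELETE
    if score >= review_at:
        return Suggestion.REVIEW
    return Suggestion.NONE


def toxic_filter(comments: list[tuple[str, float]],
                 review_at: float = DEFAULT_THRESHOLDS[0]) -> list[tuple[str, float]]:
    """Keep (comment_id, score) pairs at or above review_at, most toxic first."""
    flagged = [c for c in comments if c[1] >= review_at]
    return sorted(flagged, key=lambda c: c[1], reverse=True)


queue = [("c1", 0.12), ("c2", 0.95), ("c3", 0.64)]
for comment_id, score in toxic_filter(queue):
    print(comment_id, suggest_action(score).value)
# c2 we suggest you delete this comment
# c3 we suggest you review this comment

# A stricter community might lower both thresholds.
print(suggest_action(0.64, thresholds=(0.3, 0.6)).value)  # we suggest you delete this comment
```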

Apothecary is in its infancy, and we are really excited about its future. Ideas for improving the service that we have discussed internally (and would like feedback on) include:

- Using more signals from across our extensive network of commenters, such as user reputation, user blocks, user reports, and comment flags

- Using machine learning to assign each comment a “moderation probability score” based on that site’s moderation history

- Integrating with third parties to help us detect illegal or graphic images, as well as terrorist recruitment videos

- Continuing to test whether layering other third-party toxicity-scoring services into Apothecary improves our confidence in its scores

- Adding sentiment analysis, for example with IBM’s Watson

- Expanding the scope of Apothecary to include non-English comments
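The post doesn’t say which model would produce a “moderation probability score.” As one possible approach, a logistic regression trained on a single site’s moderation history (label 1 if moderators removed the comment, 0 if they left it up) outputs a probability per comment. The sketch below is a from-scratch version using only the standard library; the two features and the training data are hypothetical.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights: list[float], x: Sequence[float]) -> float:
    """Probability that a comment with features x gets moderated; weights[0] is the bias."""
    return sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))


def train(history: Sequence[tuple[Sequence[float], int]],
          lr: float = 0.5, epochs: int = 2000) -> list[float]:
    """Fit logistic-regression weights with batch gradient descent on log loss.

    history holds (features, label) pairs, where label is 1 if the site's
    moderators removed the comment and 0 if they left it up.
    """
    n_features = len(history[0][0])
    weights = [0.0] * (n_features + 1)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in history:
            err = predict(weights, x) - y  # derivative of log loss w.r.t. the logit
            grad[0] += err
            for j, xi in enumerate(x):
                grad[j + 1] += err * xi
        weights = [w - lr * g / len(history) for w, g in zip(weights, grad)]
    return weights


# Hypothetical features: [toxicity score, share of the author's comments previously flagged]
site_history = [
    ([0.9, 0.8], 1), ([0.8, 0.6], 1), ([0.7, 0.9], 1),
    ([0.2, 0.1], 0), ([0.1, 0.0], 0), ([0.3, 0.2], 0),
]
weights = train(site_history)
print(predict(weights, [0.85, 0.7]) > 0.5)   # True
print(predict(weights, [0.15, 0.05]) > 0.5)  # False
```

Training one model per site is what lets the score reflect that community’s own standards, which is the point of basing it on “that site’s moderation history.”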

These proposals aim to reduce the burden of moderation and to clean up spiteful, hate-promoting comments that detract from the communities and discussions they appear in. We want to be as transparent as possible about how we tackle toxic content and will keep you posted on our progress as we develop and implement this tool. As always, we want feedback from our community, so please keep the discussion going in the comments!

[1] Think of the “reasonable reader” like the “reasonable person” standard that is frequently referenced in U.S. law. I consider myself a “reasonable” person, but I spend a lot of time on the internet, and seeing one racial slur in the comments section isn’t going to make me leave the discussion. However, if that type of comment is common on a site I visit, I’ll sure be thinking twice about my involvement with that community.