The Toxicity Mod Filter, a new feature to help publishers detect and manage toxic comments, is now available for publishers across our network.

Toxic content is an ongoing challenge for publishers and readers. Too often, it costs publishers valuable reader engagement and drives up moderation costs. A 2016 study from Data & Society, a technology-focused think tank, found that over 25% of Americans have at some point refrained from posting online due to fear of harassment.

Introducing the Toxicity Mod Filter

We've been developing technology to help publishers better handle problematic content to increase discussion quality and overall engagement. As part of this work, we partnered with WIRED to better understand toxicity and test technology for detecting it. Now we’re excited to share this technology with our publishers.

Leveraging Jigsaw's Perspective API, our new Toxicity Mod Filter uses natural language processing and machine learning to detect and tag comments in the Moderation Panel that have a high likelihood of being toxic. Moderators can use this information to prioritize their efforts and lower the negative impact of toxic content on their communities. Previously, moderators relied on restricted words and user flagging to identify comments for moderation. Now they can proactively identify toxic content and deal with it more effectively.
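For readers curious about what scoring a comment with the Perspective API can look like, here is a minimal sketch. It is not our production integration: the `TOXIC_THRESHOLD` value of 0.8, the helper names, and the idea of tagging based on a single cutoff are assumptions made for illustration. The request and response shapes follow Perspective's public `comments:analyze` method, and calling `analyze` requires your own API key.

```python
import json
import urllib.request

ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"

# Assumed cutoff for illustration only; not the threshold the filter uses.
TOXIC_THRESHOLD = 0.8


def build_request(text):
    """Build a request body asking Perspective for a TOXICITY score."""
    return {
        "comment": {"text": text},
        "languages": ["en"],  # the filter currently supports English only
        "requestedAttributes": {"TOXICITY": {}},
    }


def toxicity_score(response):
    """Extract the summary TOXICITY score (0.0 to 1.0) from a response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def is_likely_toxic(response, threshold=TOXIC_THRESHOLD):
    """Return True when the score meets the (assumed) tagging threshold."""
    return toxicity_score(response) >= threshold


def analyze(text, api_key):
    """Send one comment to the Perspective API and return the parsed JSON."""
    request = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)
```

With a key in hand, `is_likely_toxic(analyze("some comment text", api_key))` would return whether that comment crosses the assumed threshold. A tag produced this way is a signal for a moderator, not a moderation decision.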

What is toxic content?

We define a toxic comment as one that typically has two or more of the following properties:

- Abuse: The main goal of the comment is to abuse or offend an individual or group of individuals

- Trolling: The main goal of the comment is to garner a negative response

- Lack of contribution: The comment does not actually contribute to the conversation

- Reasonable reader property: Reading the comment would likely cause a reasonable person to leave a discussion thread
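The "two or more" rule above can be sketched as a simple check. This is only an illustration of the definition, assuming a human reviewer has already judged each property for a comment. The class and field names are hypothetical, and because the definition says "typically", the rule is a guideline rather than a hard test.

```python
from dataclasses import dataclass


@dataclass
class CommentAssessment:
    """A reviewer's judgment of one comment (hypothetical structure)."""

    abuse: bool = False
    trolling: bool = False
    lack_of_contribution: bool = False
    would_drive_reader_away: bool = False  # the "reasonable reader" property

    def property_count(self) -> int:
        """Count how many of the four properties the comment has."""
        return sum(
            [
                self.abuse,
                self.trolling,
                self.lack_of_contribution,
                self.would_drive_reader_away,
            ]
        )

    def is_toxic(self, min_properties: int = 2) -> bool:
        """Apply the 'two or more properties' guideline."""
        return self.property_count() >= min_properties
```

Under this sketch, an off-topic comment with no other issues has one property and is not considered toxic, while an off-topic comment written to provoke a negative response has two and is.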

Getting started with the Toxicity Mod Filter

The Toxicity Mod Filter helps moderators make more informed decisions and moderate more efficiently. The filter applies only to comments posted since last week. Currently, only English-language comments are supported, and we hope to add other languages in the future. This feature does not automatically moderate or pre-moderate comments based on toxicity.

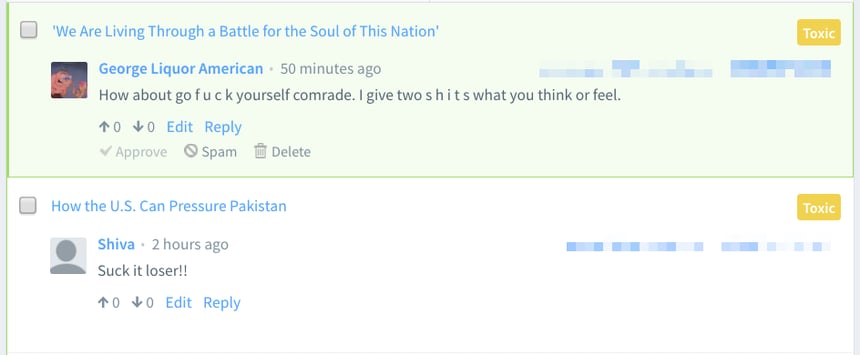

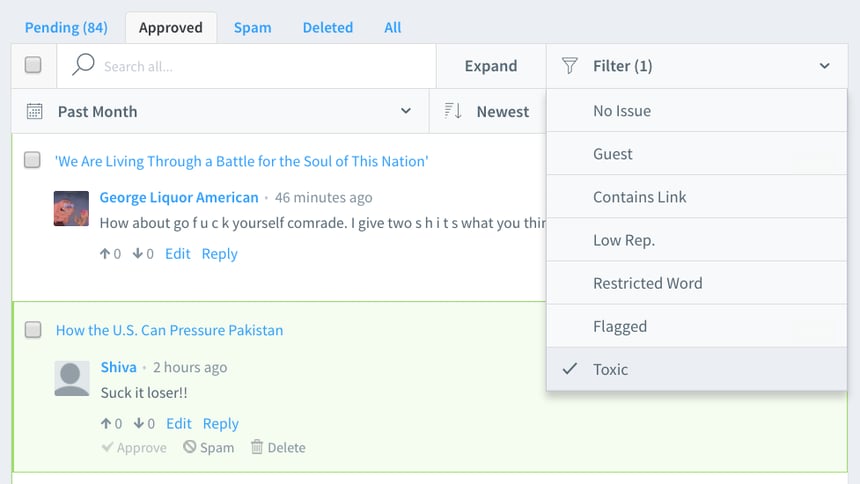

Toxic comments are tagged in the Moderation Panel. Moderators can use this information when deciding what, if any, moderation actions to take.

Moderators can filter by “Toxic” to see all comments marked as Toxic in any of the tabs in their Moderation Panels (Pending, Approved, Spam, Deleted, All).

Next steps

This initial release establishes a baseline of toxicity across our network. However, we know that what is considered acceptable and what is considered toxic will vary across different communities. To better address this, we're working on ways to incorporate community norms into toxicity scoring, with the hope of eventually providing more customized solutions for individual sites and communities.

We're also working to combine the Toxicity Mod Filter with additional signals from our network, such as user reputation, votes, and historical moderation actions, to help publishers automate heavily manual moderation workflows.

We look forward to hearing your feedback and continuing to improve the moderation experience for publishers while facilitating better discussions for communities. If you have any feedback or ideas on how we can improve, please let us know in the comments below. If you moderate comments, how do you plan to use the Toxicity Mod Filter? What areas would you like us to focus on next?